Research Supervision

A transferable and AI-enabled software security framework

Sadegh Bamohabbat Chafjiri (PhD candidate), 2022

Funded by UWE College of Art, Technology and Environment

Recent cyber security incidents caused by software vulnerabilities (e.g., “WannaCry”) have shown the necessity of proactive program analysis. Vulnerability discovery methodologies aim to identify software weaknesses by analysing software either statically or dynamically. An attacker can leverage these weaknesses to access and/or compromise systems without authorisation. In the 1990s, a novel vulnerability detection method for UNIX systems called “fuzzing” was proposed. Fuzzers feed the code under test with invalid, often randomly generated, input, aiming to trigger faults that reveal new vulnerabilities. Fuzz testing is considered one of the most important techniques for discovering zero-day vulnerabilities, and it is rapidly growing in popularity within the cyber security community. This research project aims to investigate the feasibility and effectiveness of a transferable framework that leverages machine learning to perform fuzz testing efficiently across a range of systems.
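To make the basic idea of fuzzing concrete, the sketch below is a generic random mutation fuzzer, not the ML-enabled framework this project investigates. It overwrites random bytes of a seed input, feeds each mutant to a target, and records inputs that raise unexpected exceptions. The target `toy_parser`, its planted bug, and the helpers `mutate` and `fuzz` are hypothetical, chosen only for illustration.

```python
import random
from typing import Callable, List, Tuple


def toy_parser(data: bytes) -> int:
    """Hypothetical target: a length-prefixed record parser with a planted bug."""
    if len(data) < 2:
        raise ValueError("input too short")  # graceful rejection, not a crash
    declared_length = data[0]
    payload = data[1:]
    # Planted bug: trusts the declared length instead of checking len(payload).
    return payload[declared_length - 1]


def mutate(seed: bytes, rng: random.Random) -> bytes:
    """Overwrite 1-4 randomly chosen bytes of the seed with random values."""
    data = bytearray(seed)
    for _ in range(rng.randint(1, 4)):
        data[rng.randrange(len(data))] = rng.randrange(256)
    return bytes(data)


def fuzz(target: Callable[[bytes], object], seed: bytes,
         iterations: int, rng_seed: int = 0) -> List[Tuple[bytes, str]]:
    """Feed mutated inputs to target; collect those raising unexpected errors."""
    rng = random.Random(rng_seed)
    crashes: List[Tuple[bytes, str]] = []
    for _ in range(iterations):
        candidate = mutate(seed, rng)
        try:
            target(candidate)
        except ValueError:
            continue  # expected rejection of malformed input
        except Exception as exc:  # anything else is treated as a crash
            crashes.append((candidate, type(exc).__name__))
    return crashes


if __name__ == "__main__":
    found = fuzz(toy_parser, bytes([3, 65, 66, 67]), iterations=1000)
    print(f"{len(found)} crashing inputs, e.g. {found[:1]}")
```

Mutating the length byte of the valid seed above 3 triggers the planted `IndexError`. Practical fuzzers such as AFL add coverage feedback to decide which mutants to keep; this sketch deliberately omits that and mutates blindly.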

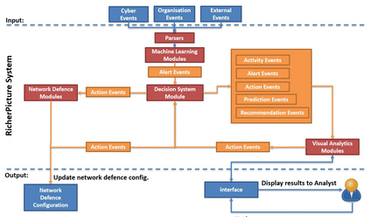

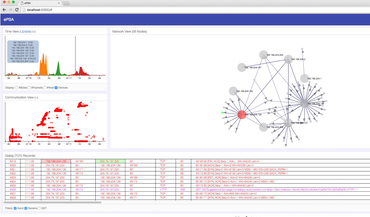

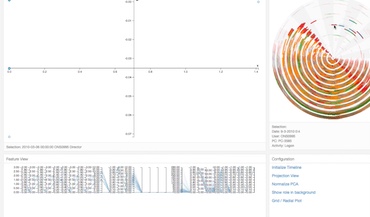

Cyber Security Analytics in Telecommunications systems: Managing security and service in complex real-time 5G networks

James Barrett (PhD candidate), 2022

Funded by Ribbon Communications Ltd. and UWE Partnership PhD scheme

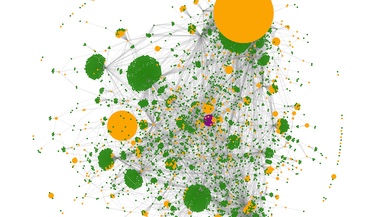

Our digital society depends on complex, dynamic telecommunications systems that support our activities and interactions, with more devices being connected and communicating each day. Managing these systems is challenging, with time-critical requirements to provide real-time functionality to services including healthcare, transport, finance, socialising, and other forms of interaction. Analysts need to ensure that networks are functional, from the physical layer through to the network and application layers. At the same time, analysts need to identify and mitigate threats, which can materialise as denial-of-service attacks, targeted sabotage of users, or breaches of confidentiality. In recent years, machine learning techniques have been applied to manage service-level provision, and yet security threats continue to challenge this domain. A major challenge is to establish cyber situational awareness, covering both the current landscape and anticipated future events. A related challenge is to integrate human-machine collaboration effectively, making the best use of machine learning while enabling analysts to home in on the contextual aspects of security threats, all in real time and with little or no disruption to the end-user service. This research will explore current trends in machine learning and communication networks, recognising the role of ML both as an enabler of cyber security and as another potential attack vector. In this setting, analysts need to determine how best to collaborate with the system: what should be automated, what should be human-assisted, and what should be a human-led investigation. Fundamentally, the challenge is to inform real-time decisions and actions about how the underlying network configuration performs, ensuring both service and security, and to understand the implications of this for end users.

Methods for Improving Robustness against Adversarial Machine Learning Attacks

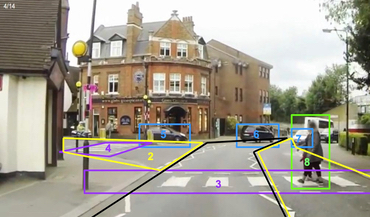

Andrew McCarthy (PhD candidate), 2019

Funded by Techmodal Ltd. and UWE Partnership PhD scheme

Machine learning systems are improving the efficiency of real-world tasks, including in the cyber security domain; however, machine learning models are susceptible to adversarial attacks, and an arms race exists between adversaries and defenders. We have accepted the benefits of these systems without fully considering their vulnerabilities, resulting in vulnerable machine learning models currently deployed in adversarial environments. For example, intrusion detection systems that are relied upon to accurately discern between malicious and benign traffic can be fooled into allowing malware onto our networks. This thesis tackles the urgent problem of improving the robustness of machine learning models against adversarial attacks, enabling safer deployment of machine learning models in adversarial and more critical domains. The logical output of this research is a set of countermeasures to defend against adversarial examples. My original contributions to knowledge are: a systematization of knowledge for adversarial machine learning in the intrusion detection domain; a generalizable approach for assessing the vulnerability and robustness of features for stream-based intrusion detection systems; a constraint-based method of generating functionality-preserving adversarial examples in the intrusion detection domain; and novel defences against adversarial examples, namely feature selection using Recursive Feature Elimination, and hierarchical classification. A primary focus of this work is how adversarial attacks against a machine learning classifier translate to non-visual domains such as cyber security, where an attacker may exploit weaknesses in an intrusion detection system classifier so that an intrusion masquerades as benign traffic. Systems that can be easily fooled are of limited use in critical areas such as cyber security.
In future, even more sophisticated adversarial attacks could be used by ransomware and malware authors to evade detection by machine learning intrusion detection systems. We advocate for more robust models. The experiments in this thesis focus on intrusion detection case studies and are implemented in Python, using the CleverHans and Adversarial Robustness Toolbox libraries to generate adversarial examples, and HiClass to perform hierarchical classification. An adversarial arms race is playing out in intrusion detection systems: every time we improve defences, adversaries find new ways to breach our networks. Currently, adversarial examples are one of the most critical holes in our defences. This thesis aims to examine the problem of robustness against adversarial examples for neural networks, helping to enable the deployment of neural networks in more critical domains.
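As a hedged illustration of how an adversarial example can let malicious traffic evade a classifier-based IDS, the sketch below applies a single Fast Gradient Sign Method (FGSM) step to a toy logistic-regression detector, perturbing only features marked as mutable and clipping each to a valid range. The mask and clip are a simple stand-in for functionality-preserving constraints, not the constraint-based method developed in the thesis, and the code does not use CleverHans or the Adversarial Robustness Toolbox. The feature names, weights, and `mutable` mask are hypothetical.

```python
import math
from typing import List

# Hypothetical toy IDS: logistic regression over three normalised flow features
# [packet_rate, mean_payload_size, duration]; weights are illustrative only.
WEIGHTS = [1.0, 2.0, -1.0]
BIAS = -1.0


def malicious_score(x: List[float]) -> float:
    """Probability that the flow is malicious under the toy model."""
    z = sum(w * xi for w, xi in zip(WEIGHTS, x)) + BIAS
    return 1.0 / (1.0 + math.exp(-z))


def sign(v: float) -> float:
    return float((v > 0) - (v < 0))


def fgsm_masked(x: List[float], epsilon: float, mutable: List[bool]) -> List[float]:
    """One FGSM step away from the 'malicious' label on mutable features only.

    For logistic regression with true label 1 the loss is -log(p), whose
    gradient w.r.t. x_i is -(1 - p) * w_i. FGSM moves each feature by
    epsilon * sign(gradient), which increases the loss, i.e. lowers p.
    """
    p = malicious_score(x)
    adversarial = []
    for xi, wi, can_change in zip(x, WEIGHTS, mutable):
        grad = -(1.0 - p) * wi
        step = epsilon * sign(grad) if can_change else 0.0
        # Clip so every feature stays inside its valid normalised range [0, 1].
        adversarial.append(min(1.0, max(0.0, xi + step)))
    return adversarial


if __name__ == "__main__":
    flow = [0.9, 0.8, 0.2]
    # Assumption: packet_rate must stay fixed for the attack to keep working.
    mutable = [False, True, True]
    adv = fgsm_masked(flow, epsilon=0.5, mutable=mutable)
    print(malicious_score(flow), malicious_score(adv))
```

With the packet-rate feature held fixed, one step with `epsilon=0.5` pushes this toy flow's malicious score from above 0.5 to below it, which is the evasion effect described above. Real traffic features are subject to domain constraints that a mask and a range clip do not capture.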

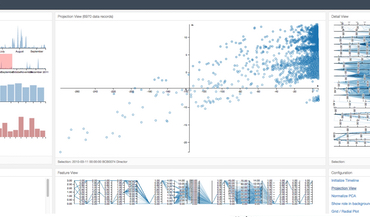

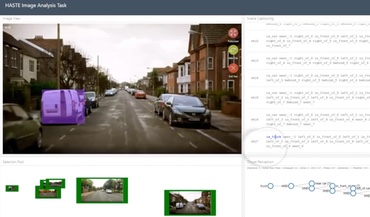

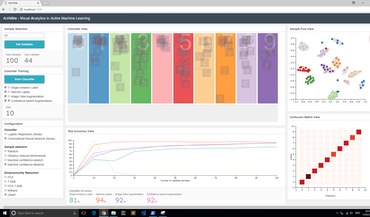

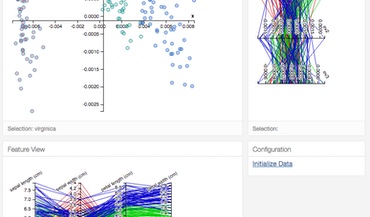

Creating Machine Intelligence with Intelligent Interactive Visualisation

Sinclair-Emmanuel Smith (PhD candidate)

Funded by Montvieux Ltd. and UWE Partnership PhD scheme

I am also responsible for supervising staff DPhil research activity.